The Scrapy shell is ideal for quick selector tests, while a Scrapy spider turns that work into a repeatable crawl that can run manually or on a schedule. Keeping crawling and extraction logic in a spider makes selector and parsing changes easier to track as sites evolve.

A Scrapy project provides scrapy.cfg, global settings, and a spiders module that contains spider classes. Each spider defines a name plus starting requests (start_urls or start_requests()) and callback methods such as parse() that receive Response objects and yield items or additional Request objects.

The project name becomes a Python package, so it must be a valid Python identifier, and each spider name must be unique within the project. Crawling behavior is controlled by settings such as ROBOTSTXT_OBEY, DOWNLOAD_DELAY, CONCURRENT_REQUESTS, and USER_AGENT; overly aggressive settings can get the crawler blocked or put unintended load on the target site.

Related: How to use Scrapy shell

Related: How to use CSS selectors in Scrapy

Steps to create a Scrapy spider:

- Launch a terminal application.

- Change to the directory that will contain the new Scrapy project.

$ cd /root/sg-work

- Install Scrapy if it is not already installed.

Related: How to install Scrapy on Ubuntu or Debian

Related: How to install Scrapy using pip

- Create a Scrapy project.

$ scrapy startproject simplifiedguide
New Scrapy project 'simplifiedguide', using template directory '/usr/lib/python3/dist-packages/scrapy/templates/project', created in:
    /root/sg-work/simplifiedguide

You can start your first spider with:
    cd simplifiedguide
    scrapy genspider example example.com

The project name becomes the top-level directory and Python package (simplifiedguide).

- Change to the project's spiders directory.

$ cd simplifiedguide/simplifiedguide/spiders/

- Generate a new spider.

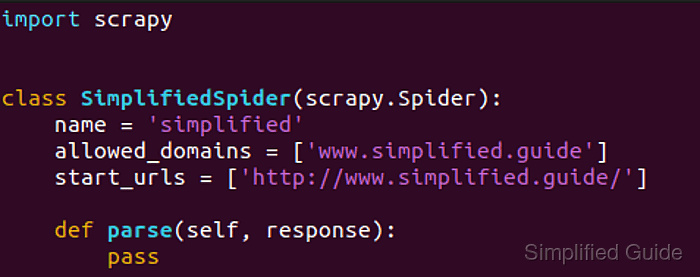

$ scrapy genspider simplified app.internal.example
Created spider 'simplified' using template 'basic' in module:
  simplifiedguide.spiders.simplified

The genspider command takes a spider name and a domain or URL, and generates a skeleton spider from the selected template. Use -l to list the available templates and -t to pick one.

$ scrapy genspider -l
Available templates:
  basic
  crawl
  csvfeed
  xmlfeed
$ scrapy genspider example app.internal.example
Created spider 'example' using template 'basic'
$ scrapy genspider -t crawl scrapyorg scrapy.org
Created spider 'scrapyorg' using template 'crawl'

- List the available spiders to confirm the new spider name.

$ scrapy list
simplified

- Edit the spider file to implement the parse() callback.

$ vi simplified.py

import scrapy


class SimplifiedSpider(scrapy.Spider):
    name = "simplified"  # used by "scrapy crawl simplified"
    allowed_domains = ["app.internal.example"]  # follow-up requests outside this domain are dropped
    start_urls = ["http://app.internal.example:8000/"]

    def parse(self, response):
        # Called with the Response for each start URL; yields one item per page.
        yield {
            "title": response.css("title::text").get(),
            "url": response.url,
        }

- Run the spider to confirm it starts with the current configuration.

$ scrapy crawl simplified
2026-01-01 06:31:41 [scrapy.utils.log] INFO: Scrapy 2.11.1 started (bot: simplifiedguide)
2026-01-01 06:31:41 [scrapy.utils.log] INFO: Versions: lxml 5.2.1.0, libxml2 2.9.14, cssselect 1.2.0, parsel 1.8.1, w3lib 2.1.2, Twisted 24.3.0, Python 3.12.3 (main, Nov 6 2025, 13:44:16) [GCC 13.3.0], pyOpenSSL 23.2.0 (OpenSSL 3.0.13 30 Jan 2024), cryptography 41.0.7, Platform Linux-6.12.54-linuxkit-aarch64-with-glibc2.39
2026-01-01 06:31:41 [scrapy.crawler] INFO: Overridden settings: {'BOT_NAME': 'simplifiedguide', 'NEWSPIDER_MODULE': 'simplifiedguide.spiders', 'ROBOTSTXT_OBEY': True, 'SPIDER_MODULES': ['simplifiedguide.spiders']}
##### snipped #####

- Export scraped items to a file for inspection.

$ scrapy crawl simplified -o simplified.json
2026-01-01 06:31:49 [scrapy.core.engine] INFO: Spider opened
2026-01-01 06:31:49 [scrapy.core.engine] INFO: Closing spider (finished)
2026-01-01 06:31:49 [scrapy.extensions.feedexport] INFO: Stored json feed (1 items) in: simplified.json
##### snipped #####

High concurrency or tight request loops can overload a site or trigger blocking; tune DOWNLOAD_DELAY and CONCURRENT_REQUESTS_PER_DOMAIN before scaling up.

- Review the exported file to verify extracted fields.

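$ head -n 20 simplified.json
[
{"title": "Example Portal", "url": "http://app.internal.example:8000/"}
]

- Configure the project settings in simplifiedguide/settings.py (for example ROBOTSTXT_OBEY, DOWNLOAD_DELAY, CONCURRENT_REQUESTS_PER_DOMAIN, and USER_AGENT) as necessary.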

Mohd Shakir Zakaria is a cloud architect with deep roots in software development and open-source advocacy. Certified in AWS, Red Hat, VMware, ITIL, and Linux, he specializes in designing and managing robust cloud and on-premises infrastructures.