Scrapy is a Python-based web scraping and crawling framework that is generally installed as a pip package. However, some Linux distributions, such as Ubuntu and Debian, include Scrapy in their default package repositories, so it can also be installed with apt.

The Ubuntu package of Scrapy is more tightly integrated with the operating system. It installs to the system's default application path, and you don't need additional tools such as pip to install it.
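One visible sign of this integration is dpkg's file database: once the python3-scrapy package is installed, dpkg can report which package owns the `scrapy` executable on your PATH. The sketch below assumes a dpkg-based system and a POSIX shell; `command -v` and `dpkg -S` are standard tools, while the function name `scrapy_owner` is hypothetical.

```shell
# Hypothetical helper: report which Debian package, if any, owns the scrapy
# executable found on PATH. Only meaningful on dpkg-based systems (Ubuntu, Debian).
scrapy_owner() {
  scrapy_bin=$(command -v scrapy) || { echo "scrapy not found on PATH"; return 1; }
  # dpkg -S prints "package: /path" when an installed package owns the file.
  if dpkg -S "$scrapy_bin" 2>/dev/null; then
    return 0
  fi
  echo "$scrapy_bin is not owned by any dpkg package (for example, installed with pip)"
  return 2
}
```

After installing via apt, running `scrapy_owner` should name python3-scrapy as the owner. A copy installed with pip (often under `/usr/local/bin` or `~/.local/bin`) is not tracked by dpkg, so the function returns 2 instead.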

However, the packaged version is normally tied to the distribution release, so you won't get the latest version of Scrapy unless you upgrade to a newer Ubuntu or Debian release.
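To see which version your release would install before committing to it, you can query apt's candidate version and compare it with the latest release on PyPI. This is a sketch assuming an apt-based system; `apt-cache policy` is a standard apt tool, `LC_ALL=C` keeps its "Candidate:" label untranslated, and the function name `apt_scrapy_candidate` is hypothetical. The result reflects the package lists as of your last `apt update`.

```shell
# Hypothetical helper: print the python3-scrapy version apt would install from
# the currently configured repositories (the "Candidate:" line of apt-cache policy).
apt_scrapy_candidate() {
  LC_ALL=C apt-cache policy python3-scrapy 2>/dev/null | awk '/Candidate:/ { print $2 }'
}

candidate=$(apt_scrapy_candidate)
echo "apt candidate for python3-scrapy: ${candidate:-not available}"
```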

Scrapy can be installed on Ubuntu and Debian from the terminal using apt.

Related: How to install Scrapy using pip

Steps to install Scrapy on Ubuntu or Debian:

- Launch the terminal application.

- Update apt's package lists from the repositories.

```
$ sudo apt update
[sudo] password for user:
Hit:1 http://archive.ubuntu.com/ubuntu impish InRelease
Hit:2 http://security.ubuntu.com/ubuntu impish-security InRelease
Hit:3 http://archive.ubuntu.com/ubuntu impish-updates InRelease
Hit:4 http://archive.ubuntu.com/ubuntu impish-backports InRelease
Reading package lists... Done
Building dependency tree... Done
Reading state information... Done
All packages are up to date.
```

- Install the python3-scrapy package using apt.

```
$ sudo apt install --assume-yes python3-scrapy
Reading package lists... Done
Building dependency tree... Done
Reading state information... Done
The following additional packages will be installed:
  ipython3 libmysqlclient21 mysql-common python3-attr python3-automat
  python3-backcall python3-boto python3-bs4 python3-constantly
  python3-cssselect python3-decorator python3-hamcrest python3-html5lib
  python3-hyperlink python3-incremental python3-ipython
  python3-ipython-genutils python3-itemadapter python3-itemloaders
  python3-jedi python3-jmespath python3-libxml2 python3-lxml python3-mysqldb
  python3-openssl python3-parsel python3-parso python3-pickleshare
  python3-prompt-toolkit python3-protego python3-pyasn1
  python3-pyasn1-modules python3-pydispatch python3-pygments
  python3-queuelib python3-service-identity python3-soupsieve
  python3-traitlets python3-twisted python3-twisted-bin python3-w3lib
  python3-wcwidth python3-webencodings python3-zope.interface
Suggested packages:
  python-attr-doc python3-genshi python-ipython-doc python3-lxml-dbg
  python-lxml-doc default-mysql-server | virtual-mysql-server
  python3-mysqldb-dbg python-openssl-doc python3-openssl-dbg
  python-pydispatch-doc python-pygments-doc ttf-bitstream-vera
  python-scrapy-doc python3-tk python3-pampy python3-qt4 python3-serial
  python3-wxgtk2.8 python3-twisted-bin-dbg
The following NEW packages will be installed:
  ipython3 libmysqlclient21 mysql-common python3-attr python3-automat
  python3-backcall python3-boto python3-bs4 python3-constantly
  python3-cssselect python3-decorator python3-hamcrest python3-html5lib
  python3-hyperlink python3-incremental python3-ipython
  python3-ipython-genutils python3-itemadapter python3-itemloaders
  python3-jedi python3-jmespath python3-libxml2 python3-lxml python3-mysqldb
  python3-openssl python3-parsel python3-parso python3-pickleshare
  python3-prompt-toolkit python3-protego python3-pyasn1
  python3-pyasn1-modules python3-pydispatch python3-pygments
  python3-queuelib python3-scrapy python3-service-identity
  python3-soupsieve python3-traitlets python3-twisted python3-twisted-bin
  python3-w3lib python3-wcwidth python3-webencodings python3-zope.interface
0 upgraded, 45 newly installed, 0 to remove and 0 not upgraded.
Need to get 8,382 kB of archives.
After this operation, 49.4 MB of additional disk space will be used.
##### snipped
```

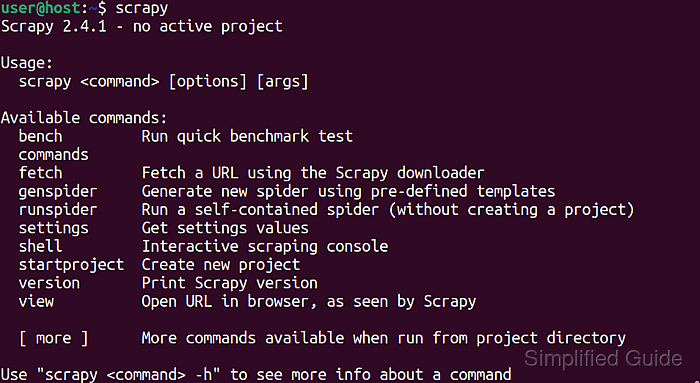

- Run the scrapy command in the terminal to verify that the installation completed successfully.

```
$ scrapy
Scrapy 2.4.1 - no active project

Usage:
  scrapy <command> [options] [args]

Available commands:
  bench         Run quick benchmark test
  commands
  fetch         Fetch a URL using the Scrapy downloader
  genspider     Generate new spider using pre-defined templates
  runspider     Run a self-contained spider (without creating a project)
  settings      Get settings values
  shell         Interactive scraping console
  startproject  Create new project
  version       Print Scrapy version
  view          Open URL in browser, as seen by Scrapy

  [ more ]      More commands available when run from project directory

Use "scrapy <command> -h" to see more info about a command
```
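The verification step above can be extended into a small check that the command runs and that the system `python3` interpreter can import the module installed by python3-scrapy. This is a sketch assuming a POSIX shell; `scrapy version` appears in the command list above, while the function name `check_scrapy` is hypothetical.

```shell
# Hypothetical helper: confirm that the scrapy command runs and that the
# system python3 can import the scrapy module. Returns 1 if any check fails.
check_scrapy() {
  if ! command -v scrapy >/dev/null 2>&1; then
    echo "scrapy command not found on PATH"
    return 1
  fi
  # "scrapy version" prints the installed version, e.g. "Scrapy 2.4.1".
  scrapy version || return 1
  # The Debian/Ubuntu package installs the module for the system python3.
  python3 -c 'import scrapy; print("python3 imports scrapy", scrapy.__version__)' || return 1
}
```

Run `check_scrapy` after the installation step; a non-zero return status means one of the checks failed.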

This guide is tested on Ubuntu:

| Version | Code Name |
|---|---|
| 22.04 LTS | Jammy Jellyfish |
| 23.10 | Mantic Minotaur |
| 24.04 LTS | Noble Numbat |

Mohd Shakir Zakaria is a cloud architect with deep roots in software development and open-source advocacy. Certified in AWS, Red Hat, VMware, ITIL, and Linux, he specializes in designing and managing robust cloud and on-premises infrastructures.
