GlusterFS volumes mounted on a Linux client behave like a normal filesystem path, making distributed storage available to applications and users without changing workflows. A client mount is commonly used for shared content, backups, and clustered services that need consistent access to the same files.

GlusterFS client mounts typically use the FUSE-based native client. The mount operation contacts the node named in the mount command only to retrieve the volume definition. After that, the client connects directly to the bricks that make up the volume to read and write data.

The client requires the GlusterFS client packages and reliable network connectivity to every node that hosts a brick of the volume. Hostnames used in the mount command and in the volume's brick definitions must resolve on the client, through DNS or static /etc/hosts entries. Firewall rules that block glusterd (24007/tcp) or the brick ports (49152/tcp and up by default) can cause mounts to hang or fail. Persistent mounts in /etc/fstab should include the _netdev option so the mount waits until networking is available during boot.
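Because blocked ports usually show up as a hanging mount rather than a clear error, a quick TCP reachability check from the client can save time. The sketch below is a hypothetical helper, assuming bash (for its /dev/tcp pseudo-path) and the coreutils timeout command are installed; it defaults to the glusterd port 24007, and brick ports can be passed explicitly.

```shell
#!/bin/sh
# Sketch: test whether a GlusterFS node accepts TCP connections on a port.
# Assumptions: bash and coreutils `timeout` are installed, and 24007/tcp is
# the glusterd management port. Brick ports (49152 and up by default) can be
# checked by passing them as the second argument.

# check_gluster_port HOST [PORT]: exit 0 if a TCP connection succeeds
check_gluster_port() {
    host=$1
    port=${2:-24007}
    # bash's /dev/tcp pseudo-path opens a TCP socket; timeout stops a filtered
    # (silently dropped) port from hanging the check
    if timeout 3 bash -c "</dev/tcp/$host/$port" 2>/dev/null; then
        echo "OK: $host:$port reachable"
        return 0
    fi
    echo "FAIL: $host:$port unreachable" >&2
    return 1
}

# Example run from the client:
# for node in node1.gluster.local node2.gluster.local; do
#     check_gluster_port "$node"
# done
```

A successful check only proves the port is open; it does not confirm that the volume is started or that the client is allowed to mount it.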

Related: How to create a GlusterFS volume

Related: How to export GlusterFS volume as NFS share

Steps to mount a GlusterFS volume in Linux:

- Launch a terminal session on a GlusterFS cluster node.

$ hostname
node1

- List available GlusterFS volumes on the cluster.

$ sudo gluster volume list
volume1

- Note the cluster node hostnames and IP addresses used for client access.

$ cat /etc/hosts
127.0.0.1 localhost
192.168.111.70 node1.gluster.local node1
192.168.111.71 node2.gluster.local node2
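Since the client talks to every brick directly, the hosts that matter are the ones named in the brick definitions. The sketch below is a hypothetical helper that extracts them, assuming its input is the text printed by gluster volume info, where brick lines look like "Brick1: host:/path". IPv6 literal addresses in brick definitions are not handled.

```shell
#!/bin/sh
# Sketch: print the unique brick hostnames of a volume, i.e. every host the
# client must be able to resolve and reach.
# Assumption: input is `gluster volume info` output with brick lines shaped
# like "Brick1: <host>:<brick path>". IPv6 literals are not handled.

# brick_hosts: read `gluster volume info` output on stdin, print one host per line
brick_hosts() {
    # Keep only "Brick<N>: host:/path" lines and print the part before the first colon
    sed -n 's/^Brick[0-9][0-9]*: *\([^:]*\):.*/\1/p' | sort -u
}

# Example on a cluster node:
# sudo gluster volume info volume1 | brick_hosts
```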

- Launch a terminal session on the Linux client.

$ hostname
client1

- Open the /etc/hosts file on the client.

$ sudoedit /etc/hosts

- Add the static host entries for the GlusterFS nodes.

192.168.111.70 node1.gluster.local node1
192.168.111.71 node2.gluster.local node2

DNS is preferred for larger environments; /etc/hosts entries are practical for small static clusters.
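When the client is prepared by a script instead of by hand, re-running the script should not duplicate the entries. The following is a minimal sketch of an idempotent append; add_host_entry is a hypothetical helper, and the script needs to run as root when the target file is /etc/hosts.

```shell
#!/bin/sh
# Sketch: append a hosts entry only when the FQDN is not already present, so a
# provisioning script can be re-run without creating duplicate lines.
# add_host_entry is a hypothetical helper; run as root when FILE is /etc/hosts.

# add_host_entry FILE IP FQDN [ALIAS...]
add_host_entry() {
    file=$1; ip=$2; fqdn=$3
    shift 3
    # Look for the FQDN as a whole field (after the IP) on non-comment lines
    if awk -v h="$fqdn" '
        /^[ \t]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
        END { exit !found }' "$file"; then
        echo "skip: $fqdn already in $file"
        return 0
    fi
    line="$ip $fqdn"
    if [ $# -gt 0 ]; then
        line="$line $*"
    fi
    printf '%s\n' "$line" >> "$file"
    echo "added: $line"
}

# Example:
# add_host_entry /etc/hosts 192.168.111.70 node1.gluster.local node1
# add_host_entry /etc/hosts 192.168.111.71 node2.gluster.local node2
```

The check matches on the FQDN rather than the IP address, so an existing entry with a different address is skipped and has to be corrected by hand.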

- Verify hostname resolution for the GlusterFS nodes from the client.

$ getent hosts node1.gluster.local node2.gluster.local
192.168.111.70 node1.gluster.local node1
192.168.111.71 node2.gluster.local node2
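getent prints only the names it resolves, so a missing host is easy to overlook when several are queried at once. A small wrapper that reports each name separately makes the failure explicit. check_resolves is a hypothetical helper; it relies on getent resolving through the system's NSS configuration (/etc/hosts, DNS), which is what most programs on the client use.

```shell
#!/bin/sh
# Sketch: confirm that every GlusterFS hostname resolves on the client before
# mounting; names that fail are reported on stderr.
# check_resolves is a hypothetical helper built on getent.

# check_resolves NAME...: exit 1 if any name fails to resolve
check_resolves() {
    status=0
    for name in "$@"; do
        if getent hosts "$name" >/dev/null; then
            echo "ok: $name"
        else
            echo "missing: $name" >&2
            status=1
        fi
    done
    return "$status"
}

# Example:
# check_resolves node1.gluster.local node2.gluster.local
```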

- Install the GlusterFS client software on the client.

$ sudo apt update && sudo apt install --assume-yes glusterfs-client
Hit:1 http://archive.ubuntu.com/ubuntu jammy InRelease
##### snipped #####
Setting up glusterfs-client ...
Processing triggers for man-db ...

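The package name differs between distributions: Debian and Ubuntu use glusterfs-client, while RHEL-based distributions and Fedora ship the FUSE client as glusterfs-fuse (for example, sudo dnf install glusterfs-fuse).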
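For scripts that must run on more than one distribution family, the package name can be chosen from /etc/os-release. This is a sketch under the assumption that Debian-family systems use glusterfs-client and RHEL-family systems and Fedora use glusterfs-fuse; gluster_client_pkg is hypothetical, and other distributions are reported as unknown rather than guessed.

```shell
#!/bin/sh
# Sketch: pick the GlusterFS FUSE client package name for the running distro.
# Assumption: Debian/Ubuntu ship it as glusterfs-client and RHEL-family/Fedora
# as glusterfs-fuse; anything else is reported as unknown.

# gluster_client_pkg [OS_RELEASE_FILE]: print a package name, exit 1 if unknown
gluster_client_pkg() {
    file=${1:-/etc/os-release}
    # Source the file in a subshell so ID/ID_LIKE do not leak into the caller
    ids=$(. "$file" && echo "$ID ${ID_LIKE:-}")
    # ID_LIKE catches derivatives such as Linux Mint or Rocky Linux
    for id in $ids; do
        case $id in
            debian|ubuntu) echo glusterfs-client; return 0 ;;
            rhel|centos|fedora|rocky|almalinux) echo glusterfs-fuse; return 0 ;;
        esac
    done
    echo "unknown distribution: $ids" >&2
    return 1
}

# Example:
# pkg=$(gluster_client_pkg) && echo "install $pkg with the distro package manager"
```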

- Create a mount point directory on the client.

$ sudo mkdir -p /mnt/volume1

- Mount the GlusterFS volume manually using the chosen node hostname.

$ sudo mount -t glusterfs node1.gluster.local:/volume1 /mnt/volume1

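An IP address can replace the hostname in the mount command when DNS or /etc/hosts entries are unavailable, but the client still has to resolve any hostnames used in the volume's brick definitions.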

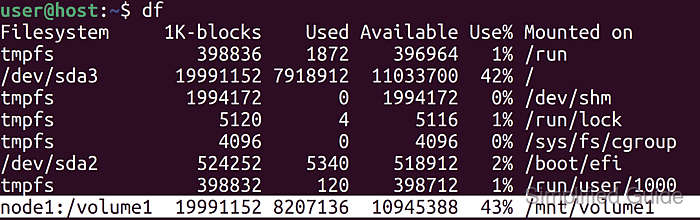
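Because the node in the mount command is only used to fetch the volume definition, a mount can also name fallback servers for that step. The sketch below wraps the manual mount so it is skipped when the path is already mounted and optionally adds the mount.glusterfs backup-volfile-servers option (a colon-separated server list). mount_gluster and the DRY_RUN variable are hypothetical; with DRY_RUN set, the command is printed instead of executed.

```shell
#!/bin/sh
# Sketch: mount a GlusterFS volume only if the mount point is not already in
# use, optionally passing fallback servers for fetching the volume definition.
# mount_gluster and DRY_RUN are hypothetical; backup-volfile-servers is a
# mount.glusterfs option taking a colon-separated list of servers.

# mount_gluster SERVER VOLUME MOUNTPOINT [BACKUP_SERVER...]
mount_gluster() {
    server=$1; volume=$2; mnt=$3
    shift 3
    if mountpoint -q "$mnt"; then
        echo "already mounted: $mnt"
        return 0
    fi
    opts=""
    if [ $# -gt 0 ]; then
        # Join the remaining arguments with ":" for backup-volfile-servers
        opts="-o backup-volfile-servers=$(echo "$*" | tr ' ' ':')"
    fi
    # With DRY_RUN set, print the command instead of running it.
    # $opts is unquoted on purpose so "-o" and its value become two words.
    ${DRY_RUN:+echo} mount -t glusterfs $opts "$server:/$volume" "$mnt"
}

# Example (as root):
# mount_gluster node1.gluster.local volume1 /mnt/volume1 node2.gluster.local
```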

- Verify the mount is successful by checking the mounted filesystem.

$ df -h /mnt/volume1
Filesystem                    Size  Used Avail Use% Mounted on
node1.gluster.local:/volume1   20G  8.0G   11G  43% /mnt/volume1
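df confirms that the volume is mounted, but not that it accepts writes (for example, when some bricks are unreachable). A write-and-read-back probe covers that case. rw_test and its probe file name are hypothetical; the probe file is removed after the check.

```shell
#!/bin/sh
# Sketch: write a small file to DIR, read it back, then remove it, to confirm
# the mounted volume accepts writes. rw_test and the probe name are hypothetical.

# rw_test DIR: exit 0 if a file can be written to and read back from DIR
rw_test() {
    dir=$1
    probe="$dir/.rw-test.$$"
    # Group the redirection so a failed open is silenced and reported below
    if ! { echo "gluster-rw-check" > "$probe"; } 2>/dev/null; then
        echo "write failed: $dir" >&2
        return 1
    fi
    content=$(cat "$probe")
    rm -f "$probe"
    if [ "$content" != "gluster-rw-check" ]; then
        echo "read-back mismatch: $dir" >&2
        return 1
    fi
    echo "read/write OK: $dir"
}

# Example:
# rw_test /mnt/volume1
```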

- Unmount the GlusterFS volume when the manual mount is no longer needed.

$ sudo umount /mnt/volume1

- Open the /etc/fstab file on the client to configure persistent mounting.

$ sudoedit /etc/fstab

- Add an entry for the GlusterFS volume in /etc/fstab.

node1.gluster.local:/volume1 /mnt/volume1 glusterfs defaults,_netdev 0 0

An incorrect /etc/fstab line can delay boot until network timeouts expire or drop the system into emergency mode.

Adding x-systemd.automount can reduce boot-time impact on systemd systems by mounting on first access.
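Since a bad /etc/fstab line can affect booting, it can help to add the entry from a script that keeps a backup and refuses to add a second entry for the same mount point. This is a sketch with a hypothetical add_fstab_entry helper; its default options match the entry shown in the previous step.

```shell
#!/bin/sh
# Sketch: append a GlusterFS /etc/fstab entry once, keeping a backup copy of
# the file first. add_fstab_entry is a hypothetical helper; the default
# options match the entry used in this guide.

# add_fstab_entry FILE SPEC MOUNTPOINT [OPTIONS]
add_fstab_entry() {
    file=$1; spec=$2; mnt=$3
    opts=${4:-defaults,_netdev}
    # Field 2 of a non-comment fstab line is the mount point
    if awk -v m="$mnt" '
        /^[ \t]*#/ { next }
        $2 == m { found = 1 }
        END { exit !found }' "$file"; then
        echo "skip: $mnt already has an entry in $file" >&2
        return 0
    fi
    cp -p "$file" "$file.bak" || return 1
    printf '%s %s glusterfs %s 0 0\n' "$spec" "$mnt" "$opts" >> "$file"
}

# Example (as root), with on-demand mounting on systemd systems:
# add_fstab_entry /etc/fstab node1.gluster.local:/volume1 /mnt/volume1 \
#     defaults,_netdev,x-systemd.automount
```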

- Save the /etc/fstab changes.

- Mount the GlusterFS volume using the /etc/fstab entry.

$ sudo mount /mnt/volume1

- Confirm that the GlusterFS volume is mounted.

$ mountpoint /mnt/volume1
/mnt/volume1 is a mountpoint

- Reboot the system to test automatic mounting at startup.

$ sudo reboot

- Verify the volume is mounted automatically after the system starts.

$ df -h /mnt/volume1
Filesystem                    Size  Used Avail Use% Mounted on
node1.gluster.local:/volume1   20G  8.0G   11G  43% /mnt/volume1
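mountpoint and df succeed for any filesystem at that path, including an empty local directory that something else mounted over. A post-boot health check can look for the GlusterFS type specifically. This sketch assumes the native client appears in /proc/mounts with the filesystem type fuse.glusterfs; is_gluster_mounted is hypothetical, its optional second argument exists only so the check can be tested against a sample file, and mount points containing spaces (escaped as \040 in /proc/mounts) are not handled.

```shell
#!/bin/sh
# Sketch: check that a path is mounted as a GlusterFS FUSE mount.
# Assumption: the native client is listed in /proc/mounts with type
# "fuse.glusterfs". is_gluster_mounted is hypothetical.

# is_gluster_mounted MOUNTPOINT [MOUNTS_FILE]
is_gluster_mounted() {
    mnt=$1
    mounts=${2:-/proc/mounts}
    # Field 2 is the mount point and field 3 the filesystem type
    awk -v m="$mnt" '$2 == m && $3 == "fuse.glusterfs" { found = 1 } END { exit !found }' "$mounts"
}

# Example:
# is_gluster_mounted /mnt/volume1 && echo "volume1 is mounted"
```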

Mohd Shakir Zakaria is a cloud architect with deep roots in software development and open-source advocacy. Certified in AWS, Red Hat, VMware, ITIL, and Linux, he specializes in designing and managing robust cloud and on-premises infrastructures.