Creating a GlusterFS volume turns directories on multiple servers into a single storage namespace that clients can mount and that can be expanded over time. Volume creation is where capacity and redundancy choices are made, and those choices are disruptive to change once data is stored, so a clean layout from the start avoids fragile fixes later.

A GlusterFS volume is built from bricks, which are export directories hosted by peers in a trusted storage pool. The glusterd management daemon stores the volume definition and propagates it across the pool, while clients fetch a volume configuration file and connect directly to the participating brick servers.

All nodes need stable name resolution and open firewall access to one another before the volume is created. Management traffic uses fixed ports, while each brick listens on a port taken from a configurable range. Brick directories should be empty and placed on dedicated storage (commonly XFS) rather than on the operating system filesystem, and replicated layouts should include quorum planning to reduce the risk of split-brain during network partitions.

Steps to create a GlusterFS volume:

- Create a trusted storage pool for GlusterFS.

- Confirm that each peer hostname resolves to the expected address.

$ getent hosts node1 node2
10.0.0.11       node1
10.0.0.12       node2

Unresolvable peer names commonly break gluster peer probe, gluster volume create, and client mounts.
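When several peers are involved, you can script the same check. The sketch below assumes bash and getent are available on every node. `check_peer_names` and its `name=address` argument format are hypothetical. It compares only the first address getent returns, which may be an IPv6 address on dual-stack hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of GlusterFS): verify that each peer name
# resolves to the expected address. Arguments are name=address pairs,
# e.g. node1=10.0.0.11. Returns non-zero if any name fails the check.
check_peer_names() {
  local pair name want got rc=0
  for pair in "$@"; do
    name=${pair%%=*}
    want=${pair#*=}
    # Take only the first address getent reports for the name.
    got=$(getent hosts "$name" | awk '{ print $1; exit }')
    if [ -z "$got" ]; then
      echo "UNRESOLVED $name"
      rc=1
    elif [ "$got" != "$want" ]; then
      echo "MISMATCH $name: got $got, expected $want"
      rc=1
    else
      echo "OK $name $got"
    fi
  done
  return "$rc"
}

# Usage on each node:
#   check_peer_names node1=10.0.0.11 node2=10.0.0.12
```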

- Verify that all peers show a connected state in the trusted storage pool.

$ sudo gluster peer status
Number of Peers: 1
Hostname: node2
Uuid: 8b1b8c1e-0e1b-4a0f-9c7d-0b6d9bb0d5c2
State: Peer in Cluster (Connected)
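In provisioning scripts, you can check the peer status output automatically instead of by eye. This is a minimal sketch. `check_peers_connected` is a hypothetical helper that assumes the `Hostname:` and `State:` line layout of `gluster peer status`. It treats a pool with no listed peers as a failure.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `gluster peer status` output on stdin and report
# every peer whose State line does not end in "(Connected)".
# Assumes the "Hostname:" / "State:" line layout of that command.
check_peers_connected() {
  awk '
    /^Hostname:/ { host = $2 }
    /^State:/ {
      total++
      if ($0 !~ /\(Connected\)$/) { print "NOT CONNECTED: " host " (" $0 ")"; bad++ }
    }
    END {
      if (total == 0) { print "no peers found"; exit 1 }
      exit (bad > 0)
    }
  '
}

# Usage:
#   sudo gluster peer status | check_peers_connected && echo "all peers connected"
```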

- Allow network access for required GlusterFS ports on all nodes.

$ sudo firewall-cmd --zone=public --add-port=24007-24008/tcp --permanent
success
$ sudo firewall-cmd --zone=public --add-port=24007-24008/udp --permanent
success
$ sudo firewall-cmd --zone=public --add-port=49152-60999/tcp --permanent
success
$ sudo firewall-cmd --reload
success

Replace public with the active firewalld zone when needed.

Brick ports are allocated from base-port to max-port (commonly 49152 to 60999) with one port consumed per brick.
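Instead of hard-coding 49152-60999 in the firewall rule, you can read the range from the glusterd configuration. The sketch assumes glusterd.vol sets the range with `option base-port` and `option max-port` lines, and it ignores commented-out lines. When a value is not set it falls back to 49152 and 60999; those fallbacks are an assumption, and the compiled-in defaults of your release may differ. `brick_port_range` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the brick port range configured in a glusterd.vol
# file as "BASE-MAX", suitable for firewall-cmd --add-port=<range>/tcp.
# Lines starting with "#" are ignored. The 49152/60999 fallbacks are an
# assumption taken from the commonly shipped values, not read from glusterd.
brick_port_range() {
  local vol=${1:-/etc/glusterfs/glusterd.vol}
  awk '
    BEGIN { base = 49152; max = 60999 }
    $1 == "option" && $2 == "base-port" { base = $3 }
    $1 == "option" && $2 == "max-port"  { max = $3 }
    END { print base "-" max }
  ' "$vol"
}

# Usage on each node:
#   range=$(brick_port_range)
#   sudo firewall-cmd --zone=public --add-port="${range}/tcp" --permanent
```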

Older releases used ports from 24009 upward instead of 49152, while Gluster 10 and later can randomize brick ports within the range configured in /etc/glusterfs/glusterd.vol.

- Create a directory for the GlusterFS brick on each node.

$ sudo mkdir -p /var/data/gluster/brick

For production, place each brick on a dedicated partition or block device instead of the operating system filesystem.

XFS is the recommended filesystem for GlusterFS bricks.
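You can turn the placement advice above into a quick pre-flight check and run it on each node before creating the volume. This sketch is not part of GlusterFS. The function names are hypothetical and it relies on GNU coreutils `stat`. It approximates the root-partition test by comparing device numbers with `/`, which may not match exactly how glusterd decides.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check for a brick directory (not a GlusterFS tool).

is_empty_dir() {
  # True only for an existing directory with no entries, hidden files included.
  [ -d "$1" ] && [ -z "$(ls -A "$1")" ]
}

on_root_fs() {
  # Same device number as / suggests the brick lives on the OS filesystem.
  [ "$(stat -c %d "$1")" = "$(stat -c %d /)" ]
}

fs_type() {
  # Filesystem type name as reported by GNU stat, e.g. "xfs" or "ext2/ext3".
  stat -f -c %T "$1"
}

check_brick_dir() {
  local dir=$1 rc=0
  is_empty_dir "$dir" || { echo "WARN $dir is missing or not empty"; rc=1; }
  on_root_fs "$dir" && { echo "WARN $dir is on the root filesystem"; rc=1; }
  [ "$(fs_type "$dir")" = "xfs" ] || { echo "WARN $dir is not on XFS"; rc=1; }
  return "$rc"
}

# Usage on each node:
#   check_brick_dir /var/data/gluster/brick
```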

- Create the GlusterFS volume from the first node.

$ sudo gluster volume create volume1 replica 2 transport tcp node1:/var/data/gluster/brick node2:/var/data/gluster/brick force
volume create: volume1: success: please start the volume to access data

Place each brick of a replica set on a different peer. Matching brick paths across servers are fine because the hostname prefix keeps each brick distinct.

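The force option overrides GlusterFS safety checks. Examples are the refusal to place a brick on the root partition (the likely reason it is needed here when /var/data sits on the operating system filesystem) and the refusal to put two bricks of one replica set on the same server. Use it only after confirming that the brick path is empty and that the bypassed check is acceptable.

Two-way replication (replica 2) can enter split-brain during network partitions; prefer an arbiter or three-way replica layout when consistency must be preserved.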
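You can check the placement rule before running volume create. GlusterFS forms replica sets from consecutive bricks in the order they are listed. For replica 2, bricks 1-2 form the first set, bricks 3-4 the second, and so on. A list that puts two bricks from the same host next to each other therefore defeats replication. The function below is a hypothetical pre-flight helper, not a gluster subcommand, and it assumes bricks are written as host:/path.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check a brick list before `gluster volume create ... replica N`.
# Bricks are grouped N at a time in the order given, so each consecutive
# group should span N different hosts.
check_replica_sets() {
  local n=$1; shift
  if [ $(( $# % n )) -ne 0 ]; then
    echo "brick count $# is not a multiple of replica $n"
    return 1
  fi
  local -a bricks=("$@")
  local i j host seen rc=0
  for (( i = 0; i < ${#bricks[@]}; i += n )); do
    seen=" "
    for (( j = i; j < i + n; j++ )); do
      host=${bricks[j]%%:*}   # host part of host:/path
      case $seen in
        *" $host "*) echo "replica set $(( i / n + 1 )) has two bricks on $host"; rc=1 ;;
      esac
      seen="$seen$host "
    done
  done
  return "$rc"
}

# Usage with the bricks from the step above:
#   check_replica_sets 2 node1:/var/data/gluster/brick node2:/var/data/gluster/brick
```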


- Start the newly created volume from the first node.

$ sudo gluster volume start volume1
volume start: volume1: success
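Scripts that may run more than once can check the volume state before starting it, because starting a volume that is already running reports an error. The helper below is a hypothetical sketch. It reads the `Status:` line of `gluster volume info` output for a single volume, which is typically Created, Started or Stopped.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "Status:" value from `gluster volume info`
# output on stdin. Intended for the output of a single volume.
volume_status() {
  awk -F': ' '/^Status:/ { print $2; exit }'
}

# Start the volume only if it is not already running:
#   if [ "$(sudo gluster volume info volume1 | volume_status)" != "Started" ]; then
#     sudo gluster volume start volume1
#   fi
```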

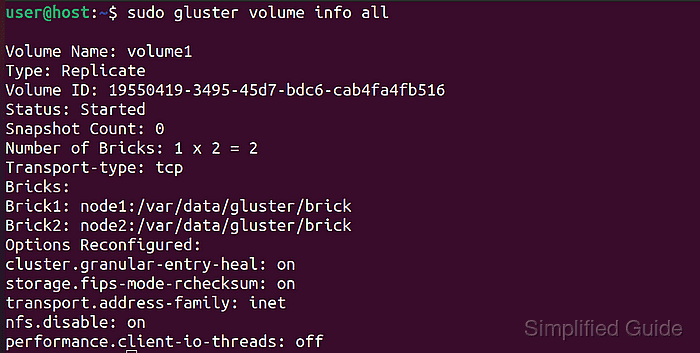

- Verify the GlusterFS volume details from the first node.

$ sudo gluster volume info all
Volume Name: volume1
Type: Replicate
Volume ID: 19550419-3495-45d7-bdc6-cab4fa4fb516
Status: Started
Snapshot Count: 0
Number of Bricks: 1 x 2 = 2
Transport-type: tcp
Bricks:
Brick1: node1:/var/data/gluster/brick
Brick2: node2:/var/data/gluster/brick
Options Reconfigured:
cluster.granular-entry-heal: on
storage.fips-mode-rchecksum: on
transport.address-family: inet
nfs.disable: on
performance.client-io-threads: off
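The `Number of Bricks: 1 x 2 = 2` line reads as one replica set (distribute count 1) of two bricks, for two bricks in total. Adding another pair later would show `2 x 2 = 4`. The hypothetical `brick_layout` helper below splits that line into its parts. It handles only the plain `D x R = T` form and reports anything else, such as the parenthesised form printed for arbiter volumes, as unparsed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: split the "Number of Bricks: D x R = T" line from
# `gluster volume info` into distribute count D, bricks per replica set R,
# and total bricks T. Other forms are printed as "unparsed".
brick_layout() {
  awk '
    /^Number of Bricks:/ {
      if (match($0, /[0-9]+ x [0-9]+ = [0-9]+[ \t]*$/)) {
        split(substr($0, RSTART), f, " ")
        print "distribute=" f[1] " replica=" f[3] " total=" f[5]
      } else {
        print "unparsed: " $0
      }
      exit
    }
  '
}

# Usage:
#   sudo gluster volume info volume1 | brick_layout
```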

- Verify that all bricks are online from the first node.

$ sudo gluster volume status volume1
Status of volume: volume1
Gluster process                             TCP Port  RDMA Port  Online  Pid
------------------------------------------------------------------------------
Brick node1:/var/data/gluster/brick         49152     0          Y       2147
Brick node2:/var/data/gluster/brick         49152     0          Y       2099
Self-heal Daemon on node1                   N/A       N/A        Y       2318
Self-heal Daemon on node2                   N/A       N/A        Y       2284
Task Status of Volume volume1
------------------------------------------------------------------------------
There are no active volume tasks
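For monitoring or scripted checks, you can filter the status table for processes that are not online. This is a hypothetical sketch. It assumes one row per process with the Online column second to last, as in the table above. Very long brick paths may be laid out differently, so treat it as a starting point rather than a robust parser.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `gluster volume status VOLUME` output on stdin and
# print every Brick or Self-heal Daemon row whose Online column is not "Y".
# Assumes one row per process with Online as the second-to-last column.
offline_processes() {
  awk '
    /^Brick / || /^Self-heal Daemon/ {
      if ($(NF - 1) != "Y") { print; bad++ }
    }
    END { exit (bad > 0) }
  '
}

# Usage:
#   sudo gluster volume status volume1 | offline_processes || echo "some processes are offline"
```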

Mohd Shakir Zakaria is a cloud architect with deep roots in software development and open-source advocacy. Certified in AWS, Red Hat, VMware, ITIL, and Linux, he specializes in designing and managing robust cloud and on-premises infrastructures.