Website owners use a robots.txt file to tell web spiders such as Googlebot what can and can't be crawled on their websites. The file resides in the root directory of a website and contains rules such as the following:

User-agent: *
Disallow: /secret
Disallow: /password.txt

A well-behaved web spider first reads the robots.txt file and adheres to its rules, though compliance is voluntary.
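As a rough illustration of how a well-behaved spider might apply such rules, the sketch below uses Python's standard urllib.robotparser module. The is_allowed helper, the example.com URLs, and the examplebot user agent are assumptions made for illustration, and the rule paths are written with leading slashes. It is not Scrapy's own robots.txt handling.

```python
from urllib.robotparser import RobotFileParser

# Sample rules in the style shown above (paths written with a leading slash).
RULES = """\
User-agent: *
Disallow: /secret
Disallow: /password.txt
"""


def is_allowed(url, user_agent="examplebot", rules=RULES):
    """Return True if the given robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(rules.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://www.example.com/about.html",
        "https://www.example.com/secret/plans.html",
        "https://www.example.com/password.txt",
    ):
        print(url, "allowed" if is_allowed(url) else "disallowed")
```

This parser matches rules as path prefixes, so /secret also covers /secret/plans.html, while /about.html is not matched by any rule and stays allowed.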

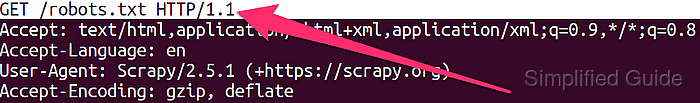

When ROBOTSTXT_OBEY is enabled, running the scrapy crawl command for a project first fetches the robots.txt file and then abides by its rules.

$ scrapy crawl simplified

2022-01-21 19:19:18 [scrapy.utils.log] INFO: Scrapy 2.5.1 started (bot: simplifiedguide)

##### snipped

{'BOT_NAME': 'simplifiedguide',

'NEWSPIDER_MODULE': 'simplifiedguide.spiders',

'ROBOTSTXT_OBEY': True,

'SPIDER_MODULES': ['simplifiedguide.spiders']}

##### snipped

2022-01-21 19:19:18 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware',

##### snipped

2022-01-21 19:19:18 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://www.simplified.guide/robots.txt> (referer: None)

You can make your Scrapy spider ignore robots.txt by setting the ROBOTSTXT_OBEY option to False.

Steps to ignore robots.txt for Scrapy spiders:

- View the robots.txt file of the website that you want to test your Scrapy spider against (optional).

$ curl https://www.simplified.guide/robots.txt
User-agent: *
Disallow: /*?do*
Disallow: /*?mode*
Disallow: /_detail/
Disallow: /_export/
Disallow: /talk/
Disallow: /wiki/
Disallow: /tag/

- Crawl the website with the scrapy crawl command and no extra options, so that the project's default setting of adhering to robots.txt rules applies.

$ scrapy crawl simplified
2022-01-21 19:27:28 [scrapy.utils.log] INFO: Scrapy 2.5.1 started (bot: simplifiedguide)
2022-01-21 19:27:28 [scrapy.utils.log] INFO: Versions: lxml 4.7.1.0, libxml2 2.9.12, cssselect 1.1.0, parsel 1.6.0, w3lib 1.22.0, Twisted 21.7.0, Python 3.9.7 (default, Sep 10 2021, 14:59:43) - [GCC 11.2.0], pyOpenSSL 20.0.1 (OpenSSL 1.1.1l 24 Aug 2021), cryptography 3.3.2, Platform Linux-5.13.0-27-generic-aarch64-with-glibc2.34
2022-01-21 19:27:28 [scrapy.utils.log] DEBUG: Using reactor: twisted.internet.epollreactor.EPollReactor
2022-01-21 19:27:28 [scrapy.crawler] INFO: Overridden settings:
{'BOT_NAME': 'simplifiedguide',
 'NEWSPIDER_MODULE': 'simplifiedguide.spiders',
 'ROBOTSTXT_OBEY': True,
##### snipped

- Use the --set option to set ROBOTSTXT_OBEY to False when crawling to ignore robots.txt rules.

$ scrapy crawl --set=ROBOTSTXT_OBEY='False' simplified
2022-01-21 19:28:33 [scrapy.utils.log] INFO: Scrapy 2.5.1 started (bot: simplifiedguide)
2022-01-21 19:28:33 [scrapy.utils.log] INFO: Versions: lxml 4.7.1.0, libxml2 2.9.12, cssselect 1.1.0, parsel 1.6.0, w3lib 1.22.0, Twisted 21.7.0, Python 3.9.7 (default, Sep 10 2021, 14:59:43) - [GCC 11.2.0], pyOpenSSL 20.0.1 (OpenSSL 1.1.1l 24 Aug 2021), cryptography 3.3.2, Platform Linux-5.13.0-27-generic-aarch64-with-glibc2.34
2022-01-21 19:28:33 [scrapy.utils.log] DEBUG: Using reactor: twisted.internet.epollreactor.EPollReactor
2022-01-21 19:28:33 [scrapy.crawler] INFO: Overridden settings:
{'BOT_NAME': 'simplifiedguide',
 'NEWSPIDER_MODULE': 'simplifiedguide.spiders',
 'ROBOTSTXT_OBEY': 'False',

- Open Scrapy's configuration file in your project folder using your favorite editor.

$ vi simplifiedguide/settings.py

- Go to the ROBOTSTXT_OBEY option.

# Obey robots.txt rules
ROBOTSTXT_OBEY = True

- Set the value to False.

ROBOTSTXT_OBEY = False

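- Run the crawl command again, this time without specifying the ROBOTSTXT_OBEY option. The option no longer appears under Overridden settings because False matches Scrapy's built-in default.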

$ scrapy crawl simplified
2022-01-21 19:29:53 [scrapy.utils.log] INFO: Scrapy 2.5.1 started (bot: simplifiedguide)
2022-01-21 19:29:53 [scrapy.utils.log] INFO: Versions: lxml 4.7.1.0, libxml2 2.9.12, cssselect 1.1.0, parsel 1.6.0, w3lib 1.22.0, Twisted 21.7.0, Python 3.9.7 (default, Sep 10 2021, 14:59:43) - [GCC 11.2.0], pyOpenSSL 20.0.1 (OpenSSL 1.1.1l 24 Aug 2021), cryptography 3.3.2, Platform Linux-5.13.0-27-generic-aarch64-with-glibc2.34
2022-01-21 19:29:53 [scrapy.utils.log] DEBUG: Using reactor: twisted.internet.epollreactor.EPollReactor
2022-01-21 19:29:53 [scrapy.crawler] INFO: Overridden settings:
{'BOT_NAME': 'simplifiedguide',
 'NEWSPIDER_MODULE': 'simplifiedguide.spiders',
 'SPIDER_MODULES': ['simplifiedguide.spiders']}
2022-01-21 19:29:53 [scrapy.extensions.telnet] INFO: Telnet Password: 1590cbf195f138f6
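In the --set step above, the value arrives as the string 'False' (the log shows 'ROBOTSTXT_OBEY': 'False'), while settings.py uses the boolean False. Both work because Scrapy coerces boolean settings when it reads them. The sketch below is a simplified approximation of that kind of coercion, modeled on the documented behavior of Scrapy's getbool() settings method. The to_bool name is a hypothetical helper for illustration, not part of Scrapy's API.

```python
def to_bool(value):
    """Coerce a setting value such as 'False', '0', or True to a boolean."""
    try:
        # Covers True/False, 1/0 and numeric strings such as "1" or "0".
        return bool(int(value))
    except ValueError:
        # Non-numeric strings: accept only spelled-out booleans.
        if value in ("True", "true"):
            return True
        if value in ("False", "false"):
            return False
        raise ValueError(f"Cannot interpret {value!r} as a boolean")


if __name__ == "__main__":
    # A plain bool() call treats any non-empty string as true,
    # which is why explicit coercion is needed for command-line values.
    print(bool("False"), to_bool("False"))
```

Under this scheme, a value such as 'no' raises an error instead of silently enabling or disabling the option.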

Author: Mohd Shakir Zakaria

Mohd Shakir Zakaria is a cloud architect with deep roots in software development and open-source advocacy. Certified in AWS, Red Hat, VMware, ITIL, and Linux, he specializes in designing and managing robust cloud and on-premises infrastructures.
