Remote backups for Linux servers reduce data loss when disks fail, hosts are compromised, or files are removed by mistake. Maintaining a second copy of important data on a separate host keeps recovery options available even if the primary system or storage cannot be restored.

With rsync over SSH, only changed data is transferred, and it travels through an encrypted channel, which keeps backup windows short and bandwidth usage low. A cron job runs the same rsync command at fixed intervals, keeping the remote copy closely aligned with the source directories while remaining easy to script and extend.

Reliable backups depend on correct paths, stable connectivity, and secure access control. The configuration described here targets non-root user data on standard Linux systems and assumes SSH access between the source and backup hosts. Databases, open virtual machine images, and system directories need extra care, because files that change during the transfer can be copied in an inconsistent state. Options such as --delete also need attention: a wrong source or destination path can remove files from the backup location that were meant to be kept.

Steps to configure automatic remote backups on Linux:

- Ensure passwordless SSH login from the local machine to the remote backup server is configured for the backup user.

$ ssh backupuser@localhost 'echo backup-ssh-ok'
backup-ssh-ok

Public key authentication enables non-interactive SSH connections that cron can run without prompting for passwords.
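A minimal sketch of how the key-based login might be set up and checked, assuming the default Ed25519 key path and the backupuser@localhost target used in this guide. The function and variable names are hypothetical. The key is created without a passphrase so that cron can use it unattended, which means the private key file itself must be protected.

```shell
#!/bin/bash
# Hypothetical names: KEY_FILE, BACKUP_HOST, SSH_BIN, setup_backup_key, check_backup_ssh.
KEY_FILE="${KEY_FILE:-$HOME/.ssh/id_ed25519}"
BACKUP_HOST="${BACKUP_HOST:-backupuser@localhost}"
SSH_BIN="${SSH_BIN:-ssh}"

# Create a passphrase-less key pair if none exists, then install the public key
# on the backup host (ssh-copy-id asks for the password one last time).
setup_backup_key() {
    [ -f "$KEY_FILE" ] || ssh-keygen -t ed25519 -N "" -f "$KEY_FILE"
    ssh-copy-id -i "$KEY_FILE.pub" "$BACKUP_HOST"
}

# Succeed only if login works with no prompt at all.
# BatchMode=yes makes ssh fail instead of asking for a password,
# which matches how the job behaves when cron runs it.
check_backup_ssh() {
    "$SSH_BIN" -o BatchMode=yes -o ConnectTimeout=10 \
        "$BACKUP_HOST" 'echo backup-ssh-ok'
}
```

Running setup_backup_key once and then check_backup_ssh should print backup-ssh-ok without any prompt; a non-zero exit status from the check means cron would not be able to log in either.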

- Create a dedicated backup directory on the remote server.

$ mkdir -p ~/backup_folder/folder_01

Using a separate directory keeps backup data isolated from regular user files and simplifies restore operations.

- Set restrictive permissions on the backup directory for the backup account.

$ chmod -R 700 ~/backup_folder/folder_01

Granting overly permissive modes such as 777 can expose backup data to other users on the remote host and increase the impact of a compromise.
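As a sketch of an alternative to a recursive chmod, the directory can be created under a restrictive umask so that every level is owner-only from the start. The helper names make_private_dir and dir_mode are hypothetical, and the path is the one used in this guide.

```shell
#!/bin/bash
# Hypothetical helpers: make_private_dir DIR, dir_mode DIR.

# Create DIR (and any missing parents) with owner-only access.
# The umask change is confined to the subshell in parentheses.
make_private_dir() {
    ( umask 077 && mkdir -p "$1" ) && chmod 700 "$1"
}

# Print the type and permission string of DIR, e.g. drwx------ for mode 700.
dir_mode() {
    ls -ld "$1" | cut -c1-10
}

# Example with the path from this guide (uncomment to run on the backup host):
# make_private_dir "$HOME/backup_folder/folder_01"
# dir_mode "$HOME/backup_folder/folder_01"
```

Directories that already exist keep their current mode with this approach; only newly created levels pick up the restrictive umask, and the final chmod covers the target directory itself.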

- Run rsync once manually from the local machine to confirm the backup path and options.

$ rsync -av --delete /home/backupuser/folder_01/ backupuser@localhost:backup_folder/folder_01
sending incremental file list
./
example.txt
sent 157 bytes  received 38 bytes  390.00 bytes/sec
total size is 12  speedup is 0.06

The --delete option removes files from the remote backup that no longer exist on the source path, so an incorrect source directory can delete valid backup data.
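One way to reduce that risk is to preview the run first. The sketch below wraps rsync's --dry-run option in a hypothetical helper, preview_backup, that also refuses to run when the source directory is empty or missing. With -v, files that would be removed from the backup are listed on lines beginning with "deleting".

```shell
#!/bin/bash
# Hypothetical helper: preview_backup SRC DEST
# Shows what rsync would transfer and delete, without changing anything.
preview_backup() {
    src="$1"
    dest="$2"
    # Guard against an unset variable or a mistyped path.
    if [ -z "$src" ] || [ ! -d "$src" ]; then
        echo "refusing to run: source directory '$src' not found" >&2
        return 1
    fi
    # --dry-run (-n) reports actions only; the trailing slash copies the
    # directory's contents rather than the directory itself.
    rsync -av --delete --dry-run "${src%/}/" "$dest"
}

# Example with the paths from this guide (uncomment to run):
# preview_backup /home/backupuser/folder_01 backupuser@localhost:backup_folder/folder_01
```

If the preview lists only the expected files, the same command without --dry-run performs the real transfer.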

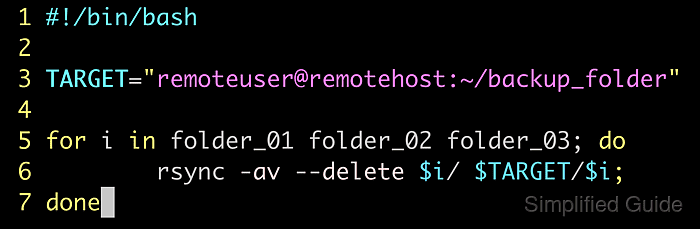

A more complete script, saved as backup.sh, can back up several folders in one run:

#!/bin/bash
# Folder names are relative to the directory the script is run from.
TARGET="backupuser@localhost:~/backup_folder"
for i in folder_01 folder_02 folder_03; do
    rsync -av --delete "$i"/ "$TARGET"/"$i"
done

- Verify that the expected files now exist in the remote backup directory.

$ ls -l ~/backup_folder/folder_01
total 4
-rw-rw-r-- 1 backupuser backupuser 12 Jan 13 22:21 example.txt

- Open the user crontab on the local machine for editing.

$ crontab -e
No modification made

cron maintains separate schedules per user, so the backup job runs with the same permissions as the account that owns the data.

- Add a scheduled rsync entry that runs the backup every night at midnight and logs output to a file.

# Run backup command every day at midnight, sending the logs to a file.
0 0 * * * rsync -av --delete /home/backupuser/folder_01/ backupuser@localhost:backup_folder/folder_01 >>"$HOME"/.backup.log 2>&1

Always verify the source and destination paths before saving a cron job that uses --delete because a typo can silently remove expected files from the backup location.
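If the job grows beyond a single line, the crontab entry could call a small wrapper instead. The following is a sketch under stated assumptions: the function name run_backup, the variables, and the lock location are all hypothetical, and the paths are the ones used in this guide. It adds timestamps to the log and skips a run when the previous one has not finished.

```shell
#!/bin/bash
# Hypothetical wrapper; names, paths, and lock location are assumptions.
SRC="${SRC:-/home/backupuser/folder_01/}"
DEST="${DEST:-backupuser@localhost:backup_folder/folder_01}"
LOCK_DIR="${LOCK_DIR:-${TMPDIR:-/tmp}/backup-folder_01.lock}"

run_backup() {
    # mkdir either creates the directory or fails, so it works as a simple
    # lock against overlapping runs.
    if ! mkdir "$LOCK_DIR" 2>/dev/null; then
        echo "$(date '+%F %T') previous backup still running, skipping"
        return 1
    fi
    echo "$(date '+%F %T') backup started"
    rsync -a --delete "$SRC" "$DEST"
    status=$?
    rmdir "$LOCK_DIR"
    echo "$(date '+%F %T') backup finished with exit status $status"
    return "$status"
}

# run_backup   # uncomment when saving this as a standalone script
```

Saved as an executable file, for example under a hypothetical path such as "$HOME"/bin/backup-cron.sh, the wrapper can replace the rsync command in the crontab entry while keeping the same log redirection. A run that is killed midway leaves the lock directory behind, so it has to be removed by hand before the next run can start.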

- List the installed crontab to confirm the backup entry is present.

$ crontab -l
# Run backup command every day at midnight, sending the logs to a file.
0 0 * * * rsync -av --delete /home/backupuser/folder_01/ backupuser@localhost:backup_folder/folder_01 >>/home/backupuser/.backup.log 2>&1

- Monitor the backup log after the first scheduled run to confirm the job finishes successfully.

$ tail -n 5 /home/backupuser/.backup.log
sending incremental file list
sent 95 bytes  received 12 bytes  214.00 bytes/sec
total size is 12  speedup is 0.11

Regularly reviewing the log helps detect connectivity issues, permission errors, or path mistakes before they affect recovery options.
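The review can be partly automated. As a rough sketch, the hypothetical helper below searches the log for lines that rsync and ssh typically emit on failure, which start with "rsync error", "rsync:", or "ssh:". The pattern is an assumption about common message prefixes, not an exhaustive list.

```shell
#!/bin/bash
# Hypothetical helper: backup_log_errors LOGFILE
# Prints matching error lines; exit status is 0 if any were found, 1 if none.
backup_log_errors() {
    grep -E '^(rsync error|rsync:|ssh:)' "$1"
}

# Example with the log path from this guide (uncomment to run):
# if backup_log_errors /home/backupuser/.backup.log; then
#     echo "backup log contains errors" >&2
# fi
```

Because the log is appended to on every run, old errors keep matching until the file is rotated or truncated.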

Mohd Shakir Zakaria is a cloud architect with deep roots in software development and open-source advocacy. Certified in AWS, Red Hat, VMware, ITIL, and Linux, he specializes in designing and managing robust cloud and on-premises infrastructures.