Large file transfers often fail because of unstable connections, power issues, or temporary server problems, and restarting from the beginning wastes both time and bandwidth. Resuming an interrupted transfer with cURL continues from the last completed portion instead of re-downloading data that has already been saved locally. This approach is especially useful when working with multi‑gigabyte images, archives, or backups over long‑distance links.

Download resumption relies on server support for HTTP Range requests, in which the client asks for the bytes starting at a specific offset in the file. cURL provides the --continue-at option (short form -C) for this: given - as its argument, it reads the size of the existing partial file, sends a range request starting at that offset, and appends the remaining data. When the server answers with 206 Partial Content, the result is a single, complete file assembled from the original and resumed transfers.
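Whether a server advertises range support can be checked before relying on a resume. The following is a minimal sketch, not a definitive test: the helper name advertises_byte_ranges is hypothetical, and some servers honor range requests without sending an Accept-Ranges header, so a negative result is only a hint.

```shell
# Hypothetical helper: reads HTTP response headers on stdin and succeeds
# when an Accept-Ranges header mentioning "bytes" is present.
advertises_byte_ranges() {
  grep -i '^accept-ranges:' | grep -qi 'bytes'
}

# Usage (needs network access; the URL is the article's example host):
# curl --silent --head "http://downloads.example.net/dataset.bin" \
#   | advertises_byte_ranges && echo "byte ranges advertised"
```

The helper reads headers from standard input so it can be tried against saved header dumps as well as live responses.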

Not every server accepts range requests or keeps the underlying file stable across interruptions, and resuming against a changed or corrupted partial file can generate invalid output. A partial file must remain on disk with the same name used for the original download, and integrity checks such as SHA‑256 hashes or trusted file size references help confirm that the resumed result is correct before use in production workflows.

Steps to resume a download with cURL:

- Open a terminal in an environment where cURL is installed and available on the command path.

- Start a new download of a large file using cURL and --remote-name so the local filename is derived from the URL.

$ curl --range 0-1048575 --remote-name "http://downloads.example.net/dataset.bin"
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100 1024k  100 1024k    0     0   118M      0 --:--:-- --:--:-- --:--:--  125M
##### snipped #####

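The progress meter shows bytes being written to the file dataset.bin on local storage. The sample command adds --range 0-1048575 so that only the first 1 MiB is fetched, which reproduces a partial file without waiting for a real interruption.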

- Interrupt the transfer so that a partial file remains on disk for later resumption.

Stopping the transfer with Ctrl+C cancels the HTTP request but leaves the partially downloaded file in place instead of deleting it.

- Confirm that the partial file exists and note its current size.

$ ls -lh dataset.bin
-rw-r--r-- 1 root root 1.0M Jan 10 05:33 dataset.bin

A size smaller than the expected total indicates that the file is incomplete and can serve as the starting point for a resume. The size alone does not prove that the bytes already on disk are intact.
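The size comparison can be scripted. The sketch below assumes the server reports a Content-Length header for the full file; both function names are hypothetical, and the header parser keeps the last value seen so that it still works when redirects produce several header blocks.

```shell
# Hypothetical helper: prints the last Content-Length value found in
# HTTP response headers read from stdin.
content_length_from_headers() {
  tr -d '\r' | awk -F': *' '
    tolower($1) == "content-length" { len = $2 }
    END { if (len != "") print len }'
}

# Hypothetical helper: succeeds when the local file ($1) is smaller than
# the expected total size in bytes ($2).
is_incomplete() {
  [ "$(( $(wc -c < "$1") ))" -lt "$2" ]
}

# Usage (needs network access):
# total=$(curl --silent --head "http://downloads.example.net/dataset.bin" \
#   | content_length_from_headers)
# is_incomplete dataset.bin "$total" && echo "partial file, resume is possible"
```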

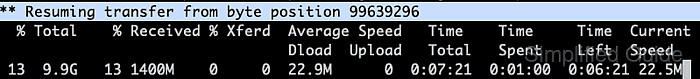

- Resume the download from the last received byte using --continue-at - together with the same URL and filename.

$ curl --continue-at - --remote-name "http://downloads.example.net/dataset.bin"
** Resuming transfer from byte position 1048576
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
##### snipped #####
100 2048k  100 2048k    0     0   444M      0 --:--:-- --:--:-- --:--:--  500M

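If the file on the server has changed since the original download, the resumed content can be invalid even though the transfer appears to succeed. If the server does not honor the range request, curl typically aborts with a range error (exit code 33) instead of appending data. In either case, remove the partial file and repeat the download without --continue-at.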
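The fallback described above can be automated. This is a sketch under stated assumptions rather than a definitive implementation: resume_or_restart is a hypothetical helper, it relies on curl's documented exit code 33 (HTTP range error), and it cannot detect a file that changed on the server, which still requires a checksum.

```shell
# Hypothetical helper: try to resume $2 from $1; if curl reports a range
# error (exit code 33), discard the partial file and download it afresh.
resume_or_restart() {
  url=$1
  file=$2
  # --fail keeps HTTP error pages from being written into the file.
  curl --fail --continue-at - --output "$file" "$url"
  status=$?
  if [ "$status" -eq 33 ]; then
    # The range request was not honored, so the partial file is unusable.
    rm -f -- "$file"
    curl --fail --output "$file" "$url"
    status=$?
  fi
  return "$status"
}

# Usage (needs network access):
# resume_or_restart "http://downloads.example.net/dataset.bin" dataset.bin
```

The helper uses --output with an explicit filename instead of --remote-name so that it knows which file to remove before restarting.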

Passing - as the argument to --continue-at tells curl to use the current size of the local file as the starting offset, which avoids manual byte calculations.
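To make the automatic offset concrete, the same resume can be written with an explicit byte position. A minimal sketch, assuming a hypothetical helper named resume_offset: the size of the partial file in bytes is also the position of the first missing byte, so a 1 MiB partial file yields 1048576, the position shown in the resume output.

```shell
# Hypothetical helper: prints the size of a file in bytes, which is the
# offset at which a resumed transfer should start.
resume_offset() {
  echo "$(( $(wc -c < "$1") ))"
}

# Usage (needs network access):
# offset=$(resume_offset dataset.bin)
# curl --continue-at "$offset" --remote-name "http://downloads.example.net/dataset.bin"
```

In everyday use the - form is simpler; the explicit form mainly helps when the offset needs to be logged or checked first.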

- Verify that the resumed download completed successfully by checking the final size and comparing a checksum against a trusted reference.

$ ls -lh dataset.bin
-rw-r--r-- 1 root root 3.0M Jan 10 05:33 dataset.bin
$ sha256sum dataset.bin
5bcd44e1ae4d34173b36402d6b2f1ecdb3460aac1d66937a5fcc92beb5ec6779  dataset.bin

Matching file size and checksum values confirm a correct resume; if verification fails, delete the file with rm dataset.bin and perform a fresh download from the beginning.
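The checksum comparison can be wrapped in a small function so that a failed verification is hard to overlook. This sketch assumes sha256sum is available, as in the step above; verify_sha256 is a hypothetical name, and the reference digest must come from a trusted source such as the publisher's release notes.

```shell
# Hypothetical helper: succeeds when the SHA-256 digest of file $1 equals
# the reference digest $2 (compared case-insensitively).
verify_sha256() {
  file=$1
  expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
  actual=$(sha256sum "$file" | awk '{ print $1 }')
  [ "$actual" = "$expected" ]
}

# Usage, with the digest from the sample output standing in for a trusted reference:
# verify_sha256 dataset.bin 5bcd44e1ae4d34173b36402d6b2f1ecdb3460aac1d66937a5fcc92beb5ec6779 \
#   || echo "checksum mismatch: delete dataset.bin and download again"
```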

Mohd Shakir Zakaria is a cloud architect with deep roots in software development and open-source advocacy. Certified in AWS, Red Hat, VMware, ITIL, and Linux, he specializes in designing and managing robust cloud and on-premises infrastructures.