Load testing exposes how a web endpoint behaves under pressure, revealing throughput limits, latency spikes, and error rates before real traffic hits production.

ApacheBench (ab) is a lightweight command-line load generator that repeatedly requests a single URL path using a configurable total request count (-n) and concurrency level (-c), then reports metrics such as Requests per second, Time per request, transfer rate, and latency percentiles.

Because ab targets one URL at a time over HTTP/1.x, results do not model full browser behavior (multiple assets, HTTP/2 multiplexing, client-side rendering), and high concurrency can shift the bottleneck to the load generator host itself. Run tests only where permission exists, start conservatively to avoid accidental denial-of-service, and account for common pitfalls such as missing trailing slashes and variable response sizes.

Steps to load-test a web server using ab:

- Choose a single benchmark URL that includes a path; for the site root, end the URL with a trailing slash.

http://host.example.net/

URLs without a path are rejected by ab.

$ ab -n 1 -c 1 http://host.example.net
ab: invalid URL
Usage: ab [options] [http[s]://]hostname[:port]/path
##### snipped #####
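The path requirement can be checked before a run is launched. The following is a minimal sketch, not part of ab itself: `has_path` is a hypothetical helper name, and it only tests for a slash after the host, which mirrors the rejection shown above without validating the rest of the URL.

```shell
# has_path URL: succeed when the URL has a "/" after the host part.
# Hypothetical helper for illustration; it does not validate the URL otherwise.
has_path() {
  # Strip an optional scheme such as http:// or https://, then look for a slash.
  case "${1#*://}" in
    */*) return 0 ;;
    *)   return 1 ;;
  esac
}

for u in http://host.example.net http://host.example.net/; do
  if has_path "$u"; then
    echo "ok: $u"
  else
    echo "missing path: $u"
  fi
done
```

A wrapper script could call this check first and stop early instead of letting ab print its usage text.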

- Run a small warm-up test to confirm reachability and prime caches.

$ ab -n 50 -c 5 http://host.example.net/
##### snipped #####
Complete requests: 50
Failed requests: 0

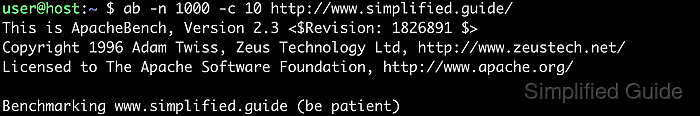

- Execute a basic load test with a fixed request count and concurrency level.

$ ab -n 1000 -c 10 http://host.example.net/
This is ApacheBench, Version 2.3 <$Revision: 1903618 $>
Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking host.example.net (be patient)
Completed 100 requests
Completed 200 requests
Completed 300 requests
Completed 400 requests
Completed 500 requests
Completed 600 requests
Completed 700 requests
Completed 800 requests
Completed 900 requests
Completed 1000 requests
Finished 1000 requests

Server Software: Apache/2.4.58
Server Hostname: host.example.net
Server Port: 80

Document Path: /
Document Length: 10671 bytes

Concurrency Level: 10
Time taken for tests: 0.063 seconds
Complete requests: 1000
Failed requests: 0
Total transferred: 10945000 bytes
HTML transferred: 10671000 bytes
Requests per second: 15822.28 [#/sec] (mean)
Time per request: 0.632 [ms] (mean)
Time per request: 0.063 [ms] (mean, across all concurrent requests)
Transfer rate: 169116.11 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    0   0.1      0       1
Processing:     0    0   0.2      0       1
Waiting:        0    0   0.1      0       1
Total:          0    1   0.3      1       2

Percentage of the requests served within a certain time (ms)
  50%      1
  66%      1
  75%      1
  80%      1
  90%      1
  95%      1
  98%      1
  99%      1
 100%      2 (longest request)

Common pattern: ab -n N -c C [-k] http://host/.

- Focus on Failed requests and Non-2xx responses to validate correctness before comparing performance.

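Requests per second tracks throughput. The first Time per request line is the mean latency of a single request as each concurrent client sees it, while the second line divides that figure by the concurrency level and so gives the average interval between completed requests across the whole test.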
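The three headline figures are tied together by simple arithmetic on the request count, concurrency level, and total test time. The sketch below recomputes them from the values in the sample run above (1000 requests, concurrency 10, 0.063 seconds). `ab_derived` is a hypothetical helper name, and because the report rounds the test duration to three decimals, the recomputed numbers differ slightly from the ones ab printed.

```shell
# ab_derived N C T: recompute headline metrics from
#   N = complete requests, C = concurrency level, T = time taken in seconds.
# Hypothetical helper for illustration only.
ab_derived() {
  awk -v n="$1" -v c="$2" -v t="$3" 'BEGIN {
    printf "rps=%.2f\n",        n / t             # Requests per second
    printf "tpr_ms=%.3f\n",     c * t * 1000 / n  # Time per request (mean)
    printf "tpr_all_ms=%.3f\n", t * 1000 / n      # mean, across all concurrent requests
  }'
}

# Values taken from the sample report; prints rps=15873.02, tpr_ms=0.630, tpr_all_ms=0.063.
ab_derived 1000 10 0.063
```

Seeing the relationship spelled out makes it clearer why raising -c can raise the first Time per request line even when throughput stays flat.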

- Increase -n and -c to model realistic peak traffic scenarios.

$ ab -n 10000 -c 50 http://host.example.net/

Large -c values can overwhelm the target and resemble a denial-of-service attack when run against production without coordination.
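One way to start conservatively is to plan a ramp of concurrency levels ahead of time and review it before anything is sent. The following sketch only prints the ab commands rather than running them. `ab_ramp` is a hypothetical helper, and the per-worker request count of 200 is an illustrative assumption chosen so that the last step matches the command above.

```shell
# ab_ramp URL PER_WORKER C1 [C2 ...]: print (do not run) one ab command per
# concurrency level, keeping -n an exact multiple of -c.
# Hypothetical helper; PER_WORKER is the number of requests per concurrent worker.
ab_ramp() {
  url=$1
  per=$2
  shift 2
  for c in "$@"; do
    echo "ab -n $((c * per)) -c $c $url"
  done
}

# Dry run: prints three commands, ending with "ab -n 10000 -c 50 http://host.example.net/".
ab_ramp http://host.example.net/ 200 10 25 50
```

Reviewing the printed plan with whoever owns the target system is a cheap safeguard before the higher levels are executed.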

- Enable KeepAlive to measure the impact of connection reuse.

$ ab -n 10000 -c 50 -k http://host.example.net/

-k reuses connections where supported, reducing TCP and TLS handshake overhead.

- Accept variable document lengths when benchmarking dynamic pages.

$ ab -n 2000 -c 20 -l http://host.example.net/

Without -l, variable response sizes often appear as Failed requests: (Length).
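The Length failures come from comparing each response size against the size of the first response. The sketch below is a simplified model of that comparison, not ab's actual code; `length_failures` is a hypothetical helper, and the byte counts are made-up sample values.

```shell
# length_failures: read one response size per line on stdin and count how many
# differ from the first one. Simplified model for illustration only.
length_failures() {
  awk 'NR == 1 { first = $1; next }
       $1 != first { n++ }
       END { print n + 0 }'
}

# Hypothetical sizes for five responses from a dynamic page; prints 2.
printf '%s\n' 10671 10671 10702 10671 10655 | length_failures
```

Under this model, a page that embeds a timestamp or session token of varying length would report failures even though every response was valid, which is the case -l is meant for.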

- Add custom headers when a virtual host, API key, or bearer token is required.

$ ab -n 1000 -c 20 -H 'Host: host.example.net' -H 'Authorization: Bearer REDACTED' http://203.0.113.10/

Repeat -H for multiple headers, and use a literal IP plus Host header when DNS is not desired.

- Create a request body file before benchmarking a POST endpoint.

$ cat > payload.json <<'EOF'
{"message":"hello"}
EOF

- Benchmark a POST endpoint with the body file and explicit content type header.

$ ab -n 500 -c 10 -p payload.json -T 'application/json' http://host.example.net/api/

POST load tests can create or modify data, so target an idempotent endpoint or a disposable test environment.

- Export timing and percentile data for plotting and comparisons.

$ ab -n 5000 -c 50 -k -e percentiles.csv -g times.tsv http://host.example.net/

-e writes a CSV percentile table and -g writes per-request timings in gnuplot-friendly format.
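A single percentile can be pulled out of the exported CSV for use in scripts. This sketch assumes the file starts with one header line followed by rows of the form percentage,milliseconds; check a real export before relying on that layout. `ab_percentile` is a hypothetical helper, and the sample rows are illustrative values, not measurements.

```shell
# ab_percentile PCT: read a percentile CSV on stdin and print the time for PCT.
# Assumes one header line, then "percentage,time_ms" rows (an assumption to verify).
ab_percentile() {
  awk -F, -v p="$1" 'NR > 1 && $1 + 0 == p + 0 { print $2; exit }'
}

# Hypothetical sample data; prints 2.870.
printf '%s\n' 'Percentage served,Time in ms' '50,1.204' '95,2.870' '99,4.115' |
  ab_percentile 95
```

With a real export the call would look like `ab_percentile 95 < percentiles.csv`.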

- Save the full output of a run to a file for repeatable comparisons.

$ ab -n 5000 -c 50 -k http://host.example.net/ > ab-5000-50-k.txt

- Extract headline metrics from saved outputs for quick side-by-side reviews.

$ grep -E 'Requests per second|Time per request|Failed requests|Non-2xx responses' ab-*.txt
Failed requests: 105
Requests per second: 34414.88 [#/sec] (mean)
Time per request: 1.453 [ms] (mean)
Time per request: 0.029 [ms] (mean, across all concurrent requests)
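When several saved reports need comparing, one row per file is easier to scan than raw grep output. The following is a rough sketch that relies on the label text seen in the reports above. `ab_summary` is a hypothetical helper, and it treats a missing Non-2xx responses line as zero.

```shell
# ab_summary FILE...: print "file<TAB>rps<TAB>failed<TAB>non2xx" for each saved report.
# Hypothetical helper; it matches the label text that appears in ab reports.
ab_summary() {
  for f in "$@"; do
    awk -v file="$f" '
      /^Failed requests:/     { failed = $3 }
      /^Non-2xx responses:/   { non2xx = $3 }
      /^Requests per second:/ { rps = $4 }
      END {
        # The Non-2xx line is absent when every response was 2xx, so default to 0.
        printf "%s\t%s\t%s\t%s\n", file, rps, failed, (non2xx == "" ? 0 : non2xx)
      }
    ' "$f"
  done
}

# Example call (requires saved reports in the current directory):
# ab_summary ab-*.txt
```

Piping the result through `sort -t "$(printf '\t')" -k2,2n` would order the runs by throughput.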

- Display the built-in help when a test needs additional flags or troubleshooting options.

$ ab -h
Usage: ab [options] [http[s]://]hostname[:port]/path
Options are:
    -n requests     Number of requests to perform
    -c concurrency  Number of multiple requests to make at a time
    -t timelimit    Seconds to max. to spend on benchmarking
                    This implies -n 50000
    -s timeout      Seconds to max. wait for each response
                    Default is 30 seconds
    -b windowsize   Size of TCP send/receive buffer, in bytes
    -B address      Address to bind to when making outgoing connections
    -p postfile     File containing data to POST. Remember also to set -T
    -u putfile      File containing data to PUT. Remember also to set -T
    -T content-type Content-type header to use for POST/PUT data, eg.
                    'application/x-www-form-urlencoded'
                    Default is 'text/plain'
    -v verbosity    How much troubleshooting info to print
    -w              Print out results in HTML tables
    -i              Use HEAD instead of GET
    -x attributes   String to insert as table attributes
    -y attributes   String to insert as tr attributes
    -z attributes   String to insert as td or th attributes
    -C attribute    Add cookie, eg. 'Apache=1234'. (repeatable)
    -H attribute    Add Arbitrary header line, eg. 'Accept-Encoding: gzip'
                    Inserted after all normal header lines. (repeatable)
    -A attribute    Add Basic WWW Authentication, the attributes
                    are a colon separated username and password.
    -P attribute    Add Basic Proxy Authentication, the attributes
                    are a colon separated username and password.
    -X proxy:port   Proxyserver and port number to use
    -V              Print version number and exit
    -k              Use HTTP KeepAlive feature
    -d              Do not show percentiles served table.
    -S              Do not show confidence estimators and warnings.
    -q              Do not show progress when doing more than 150 requests
    -l              Accept variable document length (use this for dynamic pages)
    -g filename     Output collected data to gnuplot format file.
    -e filename     Output CSV file with percentages served
    -r              Don't exit on socket receive errors.
    -m method       Method name
    -h              Display usage information (this message)
    -I              Disable TLS Server Name Indication (SNI) extension
    -Z ciphersuite  Specify SSL/TLS cipher suite (See openssl ciphers)
    -f protocol     Specify SSL/TLS protocol
                    (SSL2, TLS1, TLS1.1, TLS1.2, TLS1.3 or ALL)
    -E certfile     Specify optional client certificate chain and private key

- Re-run the same scenarios after each server change to validate improvements and catch regressions.

Keep the URL, payload, headers, -n / -c values, and network path consistent when comparing runs.

Tips for load-testing a web server using ab:

- Run ApacheBench from a separate machine to avoid consuming server resources during the test.

- Prefer a staging or pre-production environment for high-concurrency tests.

- Warm up caches with a short run before collecting baseline numbers.

- Keep -n as a multiple of -c to distribute work evenly across concurrent workers.

- Use -t to cap test duration when response times are unpredictable, keeping in mind that it implies -n 50000.

- Use -q to suppress progress output for large request counts.

- Monitor CPU, memory, network, and disk I/O during the test to identify the real bottleneck.

- Repeat each test multiple times and compare medians to reduce the impact of transient noise.

- Test with and without -k to quantify the effect of connection reuse.

- Use -l for dynamic endpoints where Document Length varies between requests.

- Remember that ab does not model browser waterfalls or HTTP/2 multiplexing, so validate assumptions with additional tooling when needed.

- Keep detailed records of test parameters, server versions, and configuration changes for reproducible comparisons.
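The tip about repeating tests and comparing medians can be sketched with standard tools. The helper below is a minimal illustration: `median` is a hypothetical name, and of the three throughput values in the example only the first comes from the sample report earlier in this guide, while the other two are invented for demonstration.

```shell
# median: read one number per line on stdin and print the median with two decimals.
# Hypothetical helper for illustration only.
median() {
  LC_ALL=C sort -n | awk '
    { v[NR] = $1 }
    END {
      if (NR == 0) exit 1            # no input, nothing to report
      if (NR % 2) printf "%.2f\n", v[(NR + 1) / 2]
      else        printf "%.2f\n", (v[NR / 2] + v[NR / 2 + 1]) / 2
    }'
}

# Requests-per-second figures from three repeated runs (two are made-up values);
# prints 15822.28.
printf '%s\n' 15822.28 14990.10 16105.77 | median
```

The median is less sensitive than the mean to a single run disturbed by a cold cache or a noisy neighbour, which is why it suits short benchmark series.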

Mohd Shakir Zakaria is a cloud architect with deep roots in software development and open-source advocacy. Certified in AWS, Red Hat, VMware, ITIL, and Linux, he specializes in designing and managing robust cloud and on-premises infrastructures.